BIG DATA

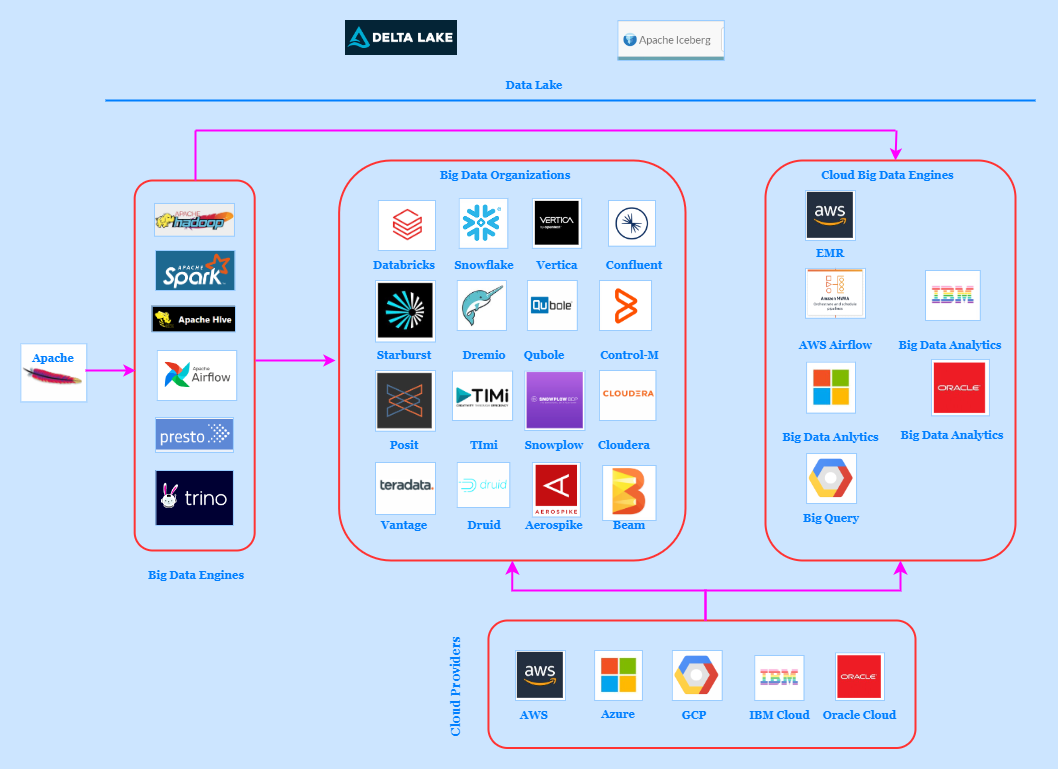

Open Source - Apache and Proprietary

We work with, and offer services in, Big Data for both the Apache open-source Hadoop framework and proprietary technology providers such as AWS, Azure, GCP, Databricks, and Snowflake, along with Presto and others. Our Big Data services cover the Hadoop, Hive, and Spark engines and the Airflow scheduler, across the Data Engineering, Data Science, Data Warehouse, Data Analyst, and Data Visualization personas. Our services help with:

- Big Data Product Development

- Big Data Product Support

- Big Data Service Development

- Big Data Service Support

- Data Lake

We work with a wide range of big data tools and technologies, which fall broadly into two groups. The first group provides big data compute engines based on Spark, Hive, Presto, or others, hosted on third-party clouds and using cloud storage. Examples include Databricks, Snowflake, Qubole, Starburst, and others. The second group provides big data engines on Linux machines or VMs, or as managed services on its own cloud. These are AWS, GCP, and Azure.

OUR SERVICES

Account Creation & Configuration

General tasks for Account Creation & Configuration are listed below. These may vary from one Big Data organization to another.

- Identify whether the account will be created through the UI or the API

- Configure the Product Account with IAM Roles or Similar Mechanisms

- Configure Storage for the account

- Configure VPC or similar infrastructure

- Configure or Migrate a Custom Metastore

- Troubleshoot Account Configuration

- Configure a Custom Tunnel between the Control and Data Planes

Cluster Infrastructure Management

General tasks for Cluster Infrastructure Management are listed below. These may vary from one Big Data organization to another.

- Create and Configure Big Data Clusters

- Create Custom Bootstrap Script

- Add User Defined Function (UDF)

- Add Custom JAR File

- Create and Configure Environments for Custom Packages

- Configure Caching and Logging

Platform Stability & Engines Optimization

General tasks for Platform Stability & Engines Optimization are listed below. These may vary from one Big Data organization to another.

- Check components during continuous operation.

- Identify components that break during scale-up.

- Optimize and troubleshoot Big Data jobs.

- Tune Big Data engines with custom utilities (e.g. Sparklens for Spark).

Schedulers Management

General tasks for Schedulers Management are listed below. These may vary from one Big Data organization to another.

- Schedule a Job with the Platform Scheduler

- Create and Deploy DAG to an Airflow Cluster

- Create DAG to Schedule an External Job

- Troubleshoot Schedulers and DAGs

- Migrate an Existing DAG to Another Scheduler or Cluster

- Integrate with an External Airflow Scheduler

Query Optimization

General tasks for Query Optimization are listed below. These may vary from one Big Data organization to another.

- Hive Query Optimizations.

- Big Data engines or cluster optimizations for faster query execution.

- Workflow Optimizations.

- Dynamic query routing based on node workloads.
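To make the last item concrete, here is a minimal sketch of workload-based query routing: each incoming query goes to the least-loaded node that still has a free slot. The `Node` record, its fields, and the concurrency limits are hypothetical. In practice, the load figures would come from the engine's or cluster manager's own metrics, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical view of a query node: name, running queries, and capacity."""
    name: str
    running_queries: int
    max_concurrency: int

    @property
    def load(self) -> float:
        # Fraction of the node's concurrency slots currently in use.
        return self.running_queries / self.max_concurrency


def route_query(nodes: List[Node]) -> Optional[Node]:
    """Pick the least-loaded node that still has a free slot, or None if all are full."""
    available = [n for n in nodes if n.running_queries < n.max_concurrency]
    if not available:
        return None  # the caller could queue the query or scale the cluster
    chosen = min(available, key=lambda n: n.load)
    chosen.running_queries += 1  # reserve a slot for the routed query
    return chosen


if __name__ == "__main__":
    cluster = [
        Node("node-a", running_queries=8, max_concurrency=10),
        Node("node-b", running_queries=2, max_concurrency=10),
        Node("node-c", running_queries=3, max_concurrency=4),
    ]
    target = route_query(cluster)
    print(target.name if target else "all nodes busy")  # node-b
```

Least-loaded routing is only one possible policy. Weighted or queue-aware policies may suit mixed workloads better.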

Integrations

General tasks for Integrations are listed below. These may vary from one Big Data organization to another.

- Integrate with Visualization Tools and Technologies.

- Integrate with Streaming Tools and Technologies.

- Integrate with Data Tools or Studios.

- Integrate with Big Data Tools and Technologies.

- Integrate with Third-party Tools via ODBC/JDBC/SDK/API

Monitoring & Health Checks

General tasks for Monitoring & Health Checks are listed below. These may vary from one Big Data organization to another.

- Assessing all infrastructure and application components for monitoring and health checks.

- Mapping identified components to projects and tuning them.

- Defining criteria, logic, and thresholds.

- Designing the monitoring data flow for the overall project or for specific parts of it.

- Identifying monitoring and health checks for internal and external use.

- Identifying tools, technologies, and software.

- Identifying out-of-the-box monitoring features.

- Integrating everything into working monitoring and health checks.
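Defining criteria, logic, and thresholds can be sketched as a simple rule table that is checked against a snapshot of collected metrics. The metric names and threshold values below are hypothetical placeholders, not recommendations. The sketch assumes that a higher value is always worse and treats a missing metric as critical.

```python
from enum import Enum
from typing import Dict, Tuple


class Status(Enum):
    OK = "OK"
    WARNING = "WARNING"
    CRITICAL = "CRITICAL"


# Hypothetical thresholds: metric name -> (warning level, critical level).
# For every metric in this sketch, a higher value is treated as worse.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "hdfs_used_pct": (75.0, 90.0),
    "yarn_pending_containers": (50, 200),
    "failed_jobs_last_hour": (1, 5),
}


def evaluate(metrics: Dict[str, float],
             thresholds: Dict[str, Tuple[float, float]] = THRESHOLDS) -> Dict[str, Status]:
    """Compare each collected metric against its thresholds and return a status per metric."""
    results: Dict[str, Status] = {}
    for name, (warn, crit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            # A missing metric is itself a health problem: the collector may be down.
            results[name] = Status.CRITICAL
        elif value >= crit:
            results[name] = Status.CRITICAL
        elif value >= warn:
            results[name] = Status.WARNING
        else:
            results[name] = Status.OK
    return results


def overall(results: Dict[str, Status]) -> Status:
    """Roll individual statuses up into one health status (the worst one wins)."""
    order = [Status.OK, Status.WARNING, Status.CRITICAL]
    return max(results.values(), key=order.index, default=Status.OK)


if __name__ == "__main__":
    snapshot = {"hdfs_used_pct": 82.5, "yarn_pending_containers": 12, "failed_jobs_last_hour": 0}
    res = evaluate(snapshot)
    for metric, status in res.items():
        print(f"{metric}: {status.value}")
    print("overall:", overall(res).value)  # overall: WARNING
```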

Cloud Usage & Optimization

General tasks for Cloud Usage & Optimization are listed below. These may vary from one Big Data organization to another.

- Identifying and Working on Billing and Pricing Framework.

- Devising usage data collection and calculations.

- Building and maintaining usage applications and systems used by customers.

- Validating Usage Data.
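Usage data collection, calculation, and validation can be sketched as follows. The sketch assumes usage arrives as hypothetical per-cluster records (account, start time, end time, node count) and is billed at a flat, hypothetical price per node-hour. Real billing frameworks may use tiered or instance-specific pricing, which this sketch does not model.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class UsageRecord:
    """Hypothetical usage event: one cluster running `nodes` nodes over a time window."""
    account: str
    cluster: str
    start: datetime
    end: datetime
    nodes: int


def validate(records: Iterable[UsageRecord]) -> Tuple[List[UsageRecord], List[UsageRecord]]:
    """Split records into valid ones and rejected ones (non-positive duration or node count)."""
    valid: List[UsageRecord] = []
    rejected: List[UsageRecord] = []
    for r in records:
        if r.end > r.start and r.nodes > 0:
            valid.append(r)
        else:
            rejected.append(r)
    return valid, rejected


def node_hours_by_account(records: Iterable[UsageRecord]) -> Dict[str, float]:
    """Sum node-hours (nodes x duration in hours) per account."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        hours = (r.end - r.start).total_seconds() / 3600
        totals[r.account] += r.nodes * hours
    return dict(totals)


def bill(node_hours: Dict[str, float], rate_per_node_hour: float) -> Dict[str, float]:
    """Apply a flat, hypothetical price per node-hour, rounded to cents."""
    return {acct: round(h * rate_per_node_hour, 2) for acct, h in node_hours.items()}


if __name__ == "__main__":
    def at(hour: int, minute: int = 0) -> datetime:
        # Hypothetical timestamps on a single day, for illustration only.
        return datetime(2024, 1, 1, hour, minute)

    raw = [
        UsageRecord("acme", "etl", at(8), at(10), nodes=4),       # 8 node-hours
        UsageRecord("acme", "adhoc", at(9), at(9, 30), nodes=2),  # 1 node-hour
        UsageRecord("globex", "ml", at(12), at(11), nodes=3),     # end before start: rejected
    ]
    good, bad = validate(raw)
    hours = node_hours_by_account(good)
    print(hours)              # {'acme': 9.0}
    print(bill(hours, 0.25))  # {'acme': 2.25}
    print(len(bad), "record(s) rejected")
```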

Security & Vulnerability Updates

General tasks for Security & Vulnerability Updates are listed below. These may vary from one Big Data organization to another.

- Manage access to the Big Data platform or engines.

- Configure encryption for data at rest and in transit.

- Perform security reviews of data requests.

- Configure Big Data authorization (e.g. Hive or Ranger).

- Check Qualys scans, penetration tests, and other vulnerabilities (e.g. Log4j).

- Check CVEs (nvd.nist.gov/vuln) for Big Data and related areas.

Migration

General tasks for Migration are listed below. These may vary from one Big Data organization to another.

- Migrate SQL jobs to Hive SQL.

- Migrate a user metastore from one environment to another.

- Migrate databases from one environment to another.

- Upgrade databases to higher versions.

- Upgrade engines (Hadoop or Hive or Spark or Airflow or Others) to higher versions.

OUR BIG DATA METHODOLOGY

A Study in Troubleshooting Complex Systems

Our methodology combines primary and secondary research in the Big Data area with a framework we have developed over time while working on complex Big Data projects.

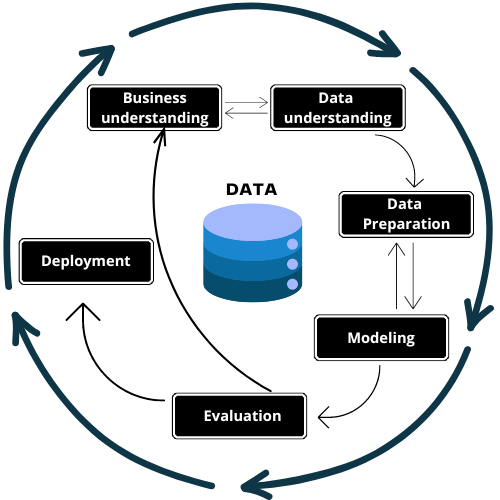

Almost every organization reaches some level of Big Data maturity during its operational journey. An organization implementing Big Data for the first time starts with an assessment. Various frameworks are used for this assessment, including KDD, CRISP-DM, SEMMA, OSEMN, and TDSP. Of these, CRISP-DM is the most widely used, while TDSP (Team Data Science Process) is a more recent framework developed by Microsoft. An organization that has already been practicing Big Data for some time faces a different set of challenges. Despite massive growth in its data, it is unable to realize the value locked away within that data, because a number of underlying, cascading challenges stand in the way.

A few facts are not going to change:

- Data will remain distributed, stored all over the place, and will crop up in more and more systems.

- Migrating data to a single location, such as a data warehouse, requires complex and expensive ETL to make it logically consistent.

- Optimized querying across all data sources is lacking.

- Sometimes it is not even known what data is available for you to use, and only tribal knowledge in the company, or years of experience with internal setups, can help you find the right data.

Our approaches to making Big Data implementation and maintenance simpler are:

- Identifying a technical view and definition of layered business units within the organization's data ecosystem.

- Building simpler components suited to federated queries and their execution, once suitable compute and storage have been identified.

- A distinctive approach to error analysis when troubleshooting technical issues.

Training for Technical Users

Big Data Core

We offer a training program on core Big Data concepts.

This program helps individuals build a strong foundation in the Big Data ecosystem. Participants learn to store data in Hive tables on top of the HDFS file system, run queries and jobs on the Spark engine, and automate workflows with Airflow DAGs.

Course Duration: 3 Months

Big Data Professional

We offer a training program on Big Data Professional concepts. It combines the Big Data Core training with training on a Big Data product such as Databricks or Snowflake (or others). This program helps individuals become industry-ready and prepared to work in organizations.

Course Duration: 6 Months

Big Data Product

We offer training programs on Big Data products such as Databricks, Snowflake, Starburst, and others. The complete list of products we offer training on is available on the detail page. The program covers the product architecture, hands-on practice with all product features, and how the product functions in all aspects. It helps individuals become industry-ready to work in organizations.

Course Duration: 3 Months

Training for Business Users

Business Analytics

We offer a training program on Business Analytics. It is intended for non-technical users who want to learn the technical aspects and do hands-on exercises. The course's main goals are to:

- lay a strong foundation in analytics fundamentals;
- develop data manipulation and analysis skills;
- apply analytical methods to real-world problems;
- master data visualization and communication;
- understand ethical and legal issues;
- build industry relevance, teamwork, and collaboration;
- encourage a mindset of continuous learning.

This program helps individuals become industry-ready to work in organizations.

Course Duration: 3 Months