Big Data Core Training

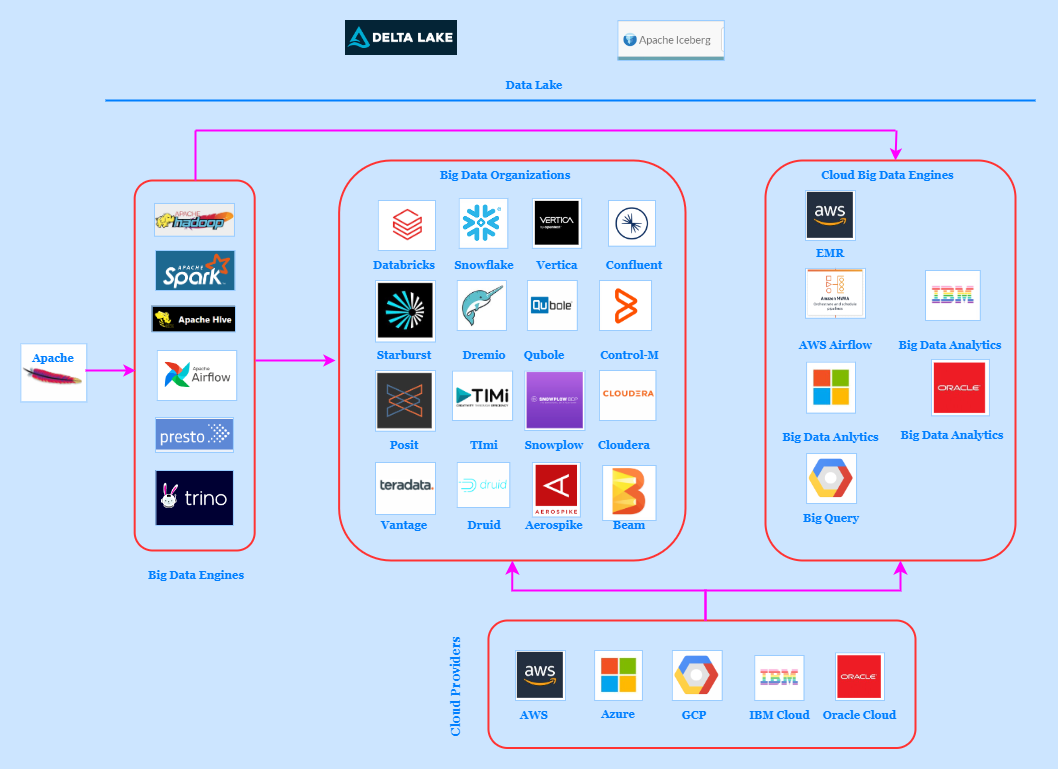

We offer a training program on core Big Data concepts. The program helps participants build a strong foundation in the Big Data ecosystem. Participants learn how to store data in Hive tables on top of HDFS, run queries and jobs on the Spark engine, and automate workflows with Airflow DAGs.

Course Duration: 3 Months

Course Objectives

- Upskill and Reskill.

- Practical knowledge of the Big Data ecosystem and how it works.

- Knowledge of how to develop real-time UDFs (user-defined functions) and analyse data.

- Knowledge of how to write real-time SQL queries.

- Ability to understand comprehensive SQL queries for business use cases.

- Ability to configure Hadoop, Hive, Spark, and Airflow to work together seamlessly for efficient data processing and analysis.

- Ability to define and schedule complex data processing workflows, improving efficiency and automation.

- Experience executing Big Data queries for various use cases.

- Experience visualizing results and deriving insights from them.

Course Topics

- Installation and configuration of Hadoop, Hive, Spark, and Airflow.

- Configuration of Hadoop for Hive, Spark, and Airflow.

- Configuration of Hive for Hadoop, Spark, and Airflow.

- Configuration of Spark for Hadoop, Hive, and Airflow.

- Configuration of Airflow to run queries against Hadoop, Hive, and Spark.

- Configuring and working with a custom metastore (MySQL or others) in Hive.

- Creating databases and tables for real-time data sets (TPC-DS).

- Installation and configuration of DBeaver.

- Loading data and running business queries against Hive.

- Installation and configuration of Jupyter Notebook for PySpark.

- Creating DAGs in Airflow for different tasks.

- Deploying DAGs on Airflow.

- Running queries from Spark and Airflow against Hive.

- Running queries from Airflow against Hive and Spark.
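
The DAG topics above rest on one idea: a workflow is a set of tasks plus the dependencies between them, and a scheduler runs each task only after everything it depends on has finished. The sketch below illustrates that ordering with Python's standard-library `graphlib` module. It is a conceptual illustration only, not Airflow's API; the task names and the `execution_order` helper are hypothetical and chosen to mirror the course topics.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each key is a task, each value is the set of
# tasks that must finish before it can start.
workflow = {
    "create_hive_tables": set(),
    "load_into_hive": {"create_hive_tables"},
    "run_spark_query": {"load_into_hive"},
    "publish_report": {"run_spark_query"},
}


def execution_order(dag):
    """Return a run order in which every task follows its dependencies."""
    # static_order() raises graphlib.CycleError if the dependencies form a
    # cycle, which is why a workflow has to be acyclic to be schedulable.
    return list(TopologicalSorter(dag).static_order())


order = execution_order(workflow)
print(order)
```

In Airflow the same dependency structure is declared between tasks inside a DAG definition, and the scheduler takes care of ordering, retries, and timing.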

Course Methodology

- Big Data maturity models : TDWI, CSC, or others.

- CRISP-DM : The Cross-Industry Standard Process for Data Mining (CRISP-DM) is a process model that serves as the base for a data science process.

- SEMMA : SEMMA (Sample, Explore, Modify, Model, Assess) is a list of sequential steps developed by SAS Institute, one of the largest producers of statistics and business intelligence software.

- OSEMN : OSEMN stands for Obtain, Scrub, Explore, Model, and iNterpret. It is a list of tasks a data scientist should be familiar with and comfortable working on.

- TDSP : The Team Data Science Process (TDSP) is a method for developing predictive analytics solutions and intelligent applications in a cost-effective and timely manner.

- TPC-X : Transaction Processing Performance Council (TPC) benchmarks for Hadoop, DS (Decision Support), DI (Data Integration), and AI (Artificial Intelligence).